Project details

| Project lead | John Speakman |
|---|---|
| Centre alignment | Centre for Blended Realities |
| Start date | 2019 |
| End date | Ongoing |

Does distributed mixed reality, incorporating interaction with artificial life, improve usability and engagement in cooperative virtual environment design?

The project uses an Artificial Life (AL) species in a shared virtual habitat to sustain engagement and facilitate co-creation, exploring a novel approach to social and human-computer interaction. The findings are intended to inform guidelines for software developers and practitioners.

The research objectives are to:

- Provide the first benchmark for distributed content creators and remote workers to determine whether the use of mixed reality (MR) is appropriate for collaborative virtual environment design.

- Determine the impact a mixed reality interface has on the usability of virtual environment design software.

- Introduce the first assessment of the capability of AL systems to effectively drive engagement with end users in a virtual environment design activity mediated by mixed reality.

- Synthesise the first guidelines to determine the appropriateness of distributed MR and AL for co-creative projects.

- Introduce a novel Artificial Intelligence (AI) paradigm, initially designed for the Artificial Life model demonstrated in this paper, which has also been shown to deliver a novel approach to the automatic synthesis of source code and has demonstrated its utility beyond the evolution of behavioural adaptations.

The increasing ubiquity of MR devices has brought greater attention to the application of the technology in professional and consumer markets. While comparisons between MR and non-MR interfaces have already been conducted (Jacob et al., 2008; Blattgerste et al., 2017; Wang & Dunston, 2013), only a small number of contexts have been explored. There are two key gaps in the literature. Firstly, there is no existing study on the use of distributed mixed reality for co-creative practice. Secondly, there are no studies on human interaction with evolving AL in a mixed reality context.

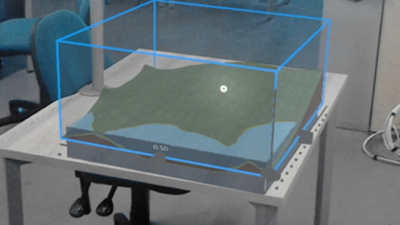

To derive the data necessary for this study, a new software application has been produced for Microsoft's HoloLens, a head-mounted display (HMD) MR device. Using this software, people can apply restricted modifications to a Collaborative Virtual Environment (CVE) and its associated environmental objects using a series of tools. This environment is rendered through the HMD MR device. The CVE is positioned as an overlay on the user's real-world environment, fitting the virtual environment to the real-world geometry.

This distributed environment is viewed by multiple people simultaneously, regardless of their geographic location. The MR HMD projects this environment into the most suitable location and scale for the local physical space (i.e. the room the person is in). This is updated in real-time such that the impact of each person's actions in the shared MR environment is experienced by everyone else sharing that environment via the Internet.
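The idea of one shared environment shown at a different location and scale in each person's room can be illustrated with a minimal sketch. Everything named here (`LocalPlacement`, `SharedEnvironment`, `apply_edit`) is hypothetical and is not taken from the project's HoloLens software. The sketch assumes placement is only a translation and a uniform scale (no rotation), and it stands in for the network with a plain in-memory dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class LocalPlacement:
    """Hypothetical per-device placement of the shared environment in a room."""

    origin: tuple  # room-space position (metres) of the shared environment's origin
    scale: float   # room metres per shared-environment unit

    def to_local(self, shared_point):
        # Shared coordinates -> this room's coordinates.
        return tuple(o + self.scale * c for o, c in zip(self.origin, shared_point))

    def to_shared(self, local_point):
        # This room's coordinates -> shared coordinates.
        return tuple((c - o) / self.scale for o, c in zip(self.origin, local_point))


@dataclass
class SharedEnvironment:
    """Stand-in for the synchronised state; a real system would replicate it over the network."""

    objects: dict = field(default_factory=dict)

    def apply_edit(self, object_id, shared_position):
        # Edits are stored in shared coordinates so every device can re-project them.
        self.objects[object_id] = shared_position


if __name__ == "__main__":
    alice = LocalPlacement(origin=(1.0, 0.0, 2.0), scale=0.5)  # small room
    bob = LocalPlacement(origin=(0.0, 0.0, 0.0), scale=2.0)    # large room
    env = SharedEnvironment()

    # Alice moves an object to a point in her room; it is stored in shared coordinates.
    env.apply_edit("rock", alice.to_shared((2.0, 0.0, 3.0)))

    # Bob sees the same object re-projected into his own room.
    print(bob.to_local(env.objects["rock"]))
```

Storing edits in shared coordinates, rather than in any one room's coordinates, is the design choice that lets each device choose its own placement without affecting the others.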

An AL species occupies this shared MR environment, interacting with elements of the virtual world and evolving through Template Based Evolution (TBE). This algorithm was selected for its evolutionary speed, its adaptation to human input, and its enactive behaviour (cognition arising from dynamic interactions between agents and their environments). Loor et al. (2009) anticipated the use of this type of algorithm in a mixed-reality context, suggesting that increased human immersion is necessary for evolutionary species to adapt sufficiently to human behaviour.
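The internals of TBE are not described here, so the following is not TBE. It is a generic selection-and-mutation loop, included only to illustrate how a population can adapt to an environment that people keep changing. The single numeric trait, the `evolve` function, and the `mutation_scale` parameter are all assumptions made for this sketch.

```python
import random


def evolve(population, environment_value, rng, mutation_scale=0.05):
    """Run one generation of a generic evolutionary step (not TBE).

    Each individual is a single number (a hypothetical trait); individuals
    whose trait is closer to the environment value are better adapted.
    """
    ranked = sorted(population, key=lambda trait: abs(trait - environment_value))
    # Keep the better-adapted half (at least one individual).
    survivors = ranked[: max(1, len(ranked) // 2)]
    # Refill the population with mutated copies of randomly chosen survivors.
    children = [
        rng.choice(survivors) + rng.gauss(0.0, mutation_scale)
        for _ in range(len(population) - len(survivors))
    ]
    return survivors + children


def best_error(population, environment_value):
    """Distance between the best-adapted individual and the environment value."""
    return min(abs(trait - environment_value) for trait in population)


if __name__ == "__main__":
    rng = random.Random(0)
    population = [rng.uniform(0.0, 1.0) for _ in range(20)]

    # The species adapts to the current environment...
    for _ in range(30):
        population = evolve(population, 0.8, rng)
    print("error before the edit:", best_error(population, 0.8))

    # ...then a user edits the environment, and adaptation starts again.
    print("error just after the edit:", best_error(population, 0.2))
    for _ in range(30):
        population = evolve(population, 0.2, rng)
    print("error after re-adapting:", best_error(population, 0.2))
```

Because the best-adapted individual always survives a generation, the best error never gets worse while the environment stays fixed; it can only jump when a user changes the environment.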

Project team

John Speakman - Project lead

John Speakman is a Research Student Teaching Associate in the Games Academy at Falmouth University. John specialises in artificial intelligence, intelligent agents, autonomous agent behaviour, geometry, data visualisation and human-computer interaction. Find out more about John's experience, research interests, projects and outputs by visiting his staff profile.

Outcomes & outputs

A series of simple objectives, with measurable outcomes, will be presented to teams of practitioners. These focus on environmental design. Each member of the team can interact with the species through the virtual environment in order to achieve these tasks. The virtual species is a means to evaluate designs, be creatively disruptive, and sustain engagement. This is realised through continuous collaborative environmental modification as it becomes necessary to keep the species alive.

Interpersonal communication will be limited to gestures (McNeill, 2000) and visualisation of other users' relative positions within the CVE. This drives creative interaction, using the environment as a communicative tool.

Impact & recognition

Expanding the field of artificial intelligence, this project delivers a novel approach to the automatic generation of code: a new method for generating fully functional software that can automatically solve a range of software and mathematical problems. The project also provides the first exploration of mixed reality in co-creative practice from a usability perspective, and the first application of Artificial Life within this medium.

The potential for the automatic generation of code may lead to a paradigm shift in the software development industry by significantly reducing the amount of human-written code in general software applications and changing the role of programmers. Broadening the understanding of usability and development practices in mixed reality may lead to greater adoption of mixed reality software, particularly for consumer-facing applications.

The outcomes of this project are aimed primarily at software developers, particularly those looking for highly efficient automatic code generation or autocoder solutions, as well as developers of mixed reality solutions and researchers studying artificial life and human collaboration in mixed reality.

The autocoder reduces development overhead and costs. It could potentially be adopted widely in the development of software applications, and it opens the possibility of solving complex coding problems automatically. As mixed reality headsets become standardised and approach mass production, standardised software development guidelines and a better understanding of usability become significant concerns, particularly as the medium approaches the consumer market.

The novel approach to the automatic generation of code has been applied in three published research papers, including those listed below. One application uses the core autocoder to generate music, with a live human audience guiding the algorithm's evolution of the music.

Associated published research papers:

J. A. Speakman, "Evolving Source Code: Object Oriented Genetic Programming in .NET Core".

N. Lorway, E. Powley, A. Wilson, J. A. Speakman, and M. Jarvis, "Autopia: An AI Collaborator for Live Coding Music Performances", presented at the International Conference on Live Coding, University of Limerick, Ireland, November 2019.